Let’s assume that, after reading my colleague Joseph Majkut’s piece earlier this week about the Pat Michaels and Paul Knappenberger paper, you remain unconvinced. Recall that Michaels and Knappenberger argued that climate models have greatly over-predicted the warming we’ve actually seen in the temperature data, and that the climate is simply not as sensitive to greenhouse gas emissions as the IPCC believes.

Perhaps you are not persuaded by Majkut’s response because you believe that:

- Temperature records from the instrumental era are inherently more reliable guides to future warming than are paleoclimate records or outputs from climate models,

- Temperature data over the past couple of decades is more telling than data from other time periods within the instrumental data set,

- Correcting climate models to better reflect advances in knowledge about various forcings, such as solar variation and the impact of aerosols, is suspect, and/or

- Analyzing the temperature data with better statistical methodologies is likewise suspect.

Even so, the Michaels and Knappenberger analysis fails to persuade. That’s because the heart of their exercise, comparing the global average temperature produced by climate models with the global average temperature from observationally based datasets, compares apples with oranges. The global temperature records use a blend of air and sea-surface temperatures, while global average temperatures from climate models typically use just air temperatures. That might seem to be a minor divergence, but it is not: it has an important impact on the underlying records.

The difficulty arises because sea-surface temperatures warm at a much slower rate than air temperatures above land. It is thus no wonder that a blended dataset containing ocean and air temperatures warms more slowly than a dataset containing just air temperatures. A further bias arises because greater areas of the Arctic Ocean have become free of sea ice due to climate change. Temperatures that would historically have been taken above the surface of the sea ice (as air temperatures) are now taken at the colder values of the ocean surface.

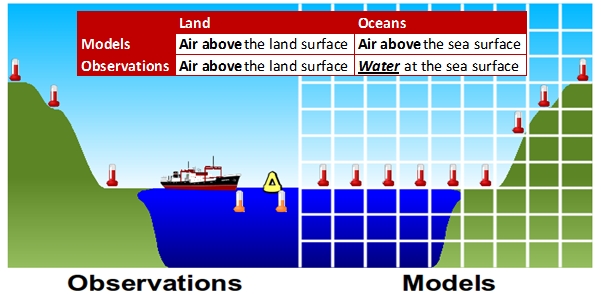

Accordingly, the output from climate models has to be adjusted to match how the observational data are constructed so as to create an apples-to-apples comparison, as shown in Figure 1.

Figure 1: Global temperatures from observations are calculated using air temperatures above the land surface and water temperatures from the upper few meters of the ocean. Models use the air temperature across all surfaces. Image Source.
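To make the blending effect concrete, here is a minimal sketch of the two ways of computing a global mean. The anomaly values are made-up illustrative numbers, not data from any of the studies discussed, and the function name is hypothetical. The only real-world figure is the rough 71% ocean share of the Earth's surface. The sketch ignores sea ice and uneven station coverage.

```python
# Roughly 71% of the Earth's surface is ocean.
OCEAN_FRACTION = 0.71


def global_means(land_air, ocean_air, sea_surface, ocean_fraction=OCEAN_FRACTION):
    """Return (air_only, blended) area-weighted global-mean anomalies in deg C.

    air_only: air temperature everywhere, as model output is typically averaged.
    blended:  air temperature over land plus sea-surface temperature over ocean,
              as the observational records are constructed.
    """
    land_fraction = 1.0 - ocean_fraction
    air_only = land_fraction * land_air + ocean_fraction * ocean_air
    blended = land_fraction * land_air + ocean_fraction * sea_surface
    return air_only, blended


# Hypothetical anomalies (deg C), chosen only to illustrate the direction of the bias:
# the water surface is assumed to have warmed less than the air above it.
air_only, blended = global_means(land_air=1.0, ocean_air=0.6, sea_surface=0.5)
print(f"air-only: {air_only:.3f} C, blended: {blended:.3f} C")
```

With these assumed inputs the blended mean comes out lower than the air-only mean, even though both describe the same hypothetical planet. That is the sense in which an unadjusted model-observation comparison is biased.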

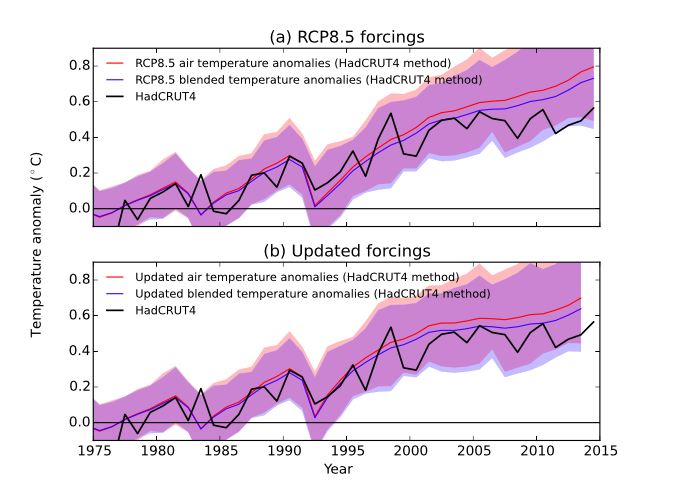

A recent study by Cowtan et al. (2015) suggests that accounting for these biases between the global temperature record and the global averages taken from climate models reduces the divergence in trend between models and observations since 1975 by over a third. The correction consigns much of the divergence in absolute temperature between the climate models and the instrumental data to the last decade, as shown below.

Figure 2: Comparing apples-to-apples sampling of climate model output (blue) and the instrumental record for temperature (black), instead of air temperatures (red), resolves some of the model-data divergence [Panel (a)]. Further adjusting for errors in how the model simulations were constructed, as we previously discussed, does more to resolve model-data divergence [Panel (b)]. [Image Source: Cowtan et al. 2015, Supplement.]

The figure above from Cowtan et al. shows how accounting for the apples-to-oranges problem reduces the divergence in temperature between climate models and instrumental data. But the Michaels and Knappenberger analysis considers differences in temperature trends. Cowtan et al. have posted climate model output that reflects how the instrumental data are collected. Applying the Michaels and Knappenberger trend test to that output reduces the number of years rejected by the test by about half and resolves the misfit for trends longer than 30 years.
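For readers who want to see the shape of such a trend test, the sketch below shows one simplified reading of it: for each trend length, fit a linear trend to the last n years of the observations and of every model run, and flag the length as "rejected" if the observed trend falls in the lowest 2.5% of the model trends. This is an assumption about the general form of the test, not Michaels and Knappenberger's actual code. The function names, the 2.5% threshold, the toy series, and the 0.8 scaling used to stand in for the blending correction are all hypothetical.

```python
def ols_slope(y):
    """Ordinary least-squares slope of y against its index (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def rejected_lengths(observed, model_runs, lengths, tail=0.025):
    """Return the trend lengths at which the observed trend sits in the lower tail
    of the model-run trends (fraction of runs at or below it is less than `tail`)."""
    rejected = []
    for n in lengths:
        obs_trend = ols_slope(observed[-n:])
        model_trends = [ols_slope(run[-n:]) for run in model_runs]
        frac_below = sum(t <= obs_trend for t in model_trends) / len(model_trends)
        if frac_below < tail:
            rejected.append(n)
    return rejected


# Toy data: 40 years, perfectly linear series (no noise), purely for illustration.
years = range(40)
observed = [0.015 * t for t in years]
air_only_runs = [[(0.018 + 0.0002 * k) * t for t in years] for k in range(40)]
# Hypothetical stand-in for blending: scale every model run down by 20%.
blended_runs = [[0.8 * v for v in run] for run in air_only_runs]

lengths = [10, 20, 30]
print("rejected (air-only):", rejected_lengths(observed, air_only_runs, lengths))
print("rejected (blended): ", rejected_lengths(observed, blended_runs, lengths))
```

In this toy setup the observed trend lies below every air-only run but inside the range of the rescaled runs, so the rejections disappear. Real model output is noisy, so the outcome there depends on the trend length, as the text describes.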

Some years may still “fail” the Michaels and Knappenberger test, but keep in mind some important factors.

- Recent papers by Cowtan and Way (2014) and Karl et al. (2015) showed that filling in gaps in the observational record where there are limited observations (such as at the poles and in Africa) and updating global sea-surface temperature records led to an increase in the rate of warming in the global temperature record throughout the last 10-15 years, further reducing the model-observation data gap.

- There is a reason climate scientists warn against using trends shorter than 17 years, and much prefer 30 years as a minimum. Natural variability of the climate system can skew the short-term trend and temporarily mask the long-term one. Trends longer than 30 years should not be rejected by the Michaels and Knappenberger test when the apples-to-apples correction is made.
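The point about short trends can be illustrated with a small simulation. The sketch below generates many synthetic temperature series with the same underlying trend plus random year-to-year noise, then measures how widely the fitted trend scatters for different window lengths. The trend of 0.02 °C per year, the noise level, and the use of uncorrelated (white) noise are all assumptions made for illustration. Real climate variability is correlated from year to year, which would make short trends less reliable still.

```python
import random


def ols_slope(y):
    """Ordinary least-squares slope of y against its index (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def trend_spread(length, n_runs=200, n_years=60, true_trend=0.02, noise_sd=0.1, seed=0):
    """Standard deviation of trends fitted to the last `length` years of
    synthetic series that all share the same true trend."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    slopes = []
    for _ in range(n_runs):
        series = [true_trend * t + rng.gauss(0, noise_sd) for t in range(n_years)]
        slopes.append(ols_slope(series[-length:]))
    mean = sum(slopes) / len(slopes)
    return (sum((s - mean) ** 2 for s in slopes) / len(slopes)) ** 0.5


for length in (10, 17, 30):
    print(f"{length}-year trends: spread of {trend_spread(length):.4f} C/yr")
```

The spread shrinks as the window lengthens: every series has the same underlying trend, but a 10-year window recovers it far less reliably than a 30-year one.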

Science is a learning process. We’ve learned since Michaels and Knappenberger’s initial analysis that adjusting for differences in how global temperature is represented in models and observations is important. Also important is understanding how these models are forced. The upshot is that, absent at least the considerations discussed here, rejecting climate models as too sensitive to carbon because of model-data misfit is an erroneous exercise, as Marotzke & Forster argued this year.

[Photo credit: Ansgar Walk via Wikimedia commons.]