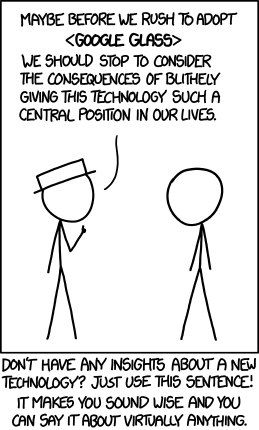

I recently came across a 2013 post at the Technology Liberation Front. In it, Adam Thierer points to an XKCD comic that perfectly encapsulates a sentiment often on display in policy discussions about regulating emerging technologies.

The idea that the best way to address concerns with new technologies is to “consider the consequences” is all too common in policy conversations. But what does that actually mean in wonk-speak? Adam has some thoughts:

> [A]fter conjuring up a long parade of horribles and suggesting “we need to have a conversation” about new technologies, authors of such essays almost never finish their thought. There’s no conclusion or clear alternative offered. I suppose that in some cases it is because there aren’t any easy answers. Other times, however, I get the feeling that they have an answer in mind — comprehensive regulation of new technologies in question — but that they don’t want to come out and say it because they think they’ll sound like Luddites. Hell, I don’t know and, again, I don’t want to guess as to motive. I just find it interesting that so much of the writing being done in this arena these days follows that exact model.

Like Adam, I’m increasingly wondering what the “let’s have a conversation” crowd is actually aiming for. What’s the end goal they expect from these conversations? Calls for a “broad societal consensus” or an approach that embraces the “consent of the governed” or “opening doors to conversation” don’t illuminate clear, articulable, and actionable policy recommendations. Even when a forum for dialogue is convened (such as a multistakeholder process or soft law proceeding that embraces ongoing collaboration), someone always claims that there remains an elusive “other conversation” that is not being had. Thus, real solutions remain forever beyond our grasp.

I don’t want to impugn or question anyone’s motives; doing so is seldom productive, and it is certainly not conducive to reaching a reasonable compromise. Unfortunately, the vagueness with which the “conversation” advocates address emerging technology issues leaves little room to conclude that their positions are anything other than a disguised antipathy toward technological progress. If that’s the case, they should say so clearly. If not, they should say that clearly too. In his book A Dangerous Master, Wendell Wallach does at least that much for us:

> [T]he cavalier adoption of technologies whose impact will be far-reaching and uncertain is the sign of a society that has lost its way. Moderating the adoption of technology should not be done for ideological reasons. Rather, it provides a means to fortify the safety of people affected by unpredictable disruptions. A moderate pace allows us to effectively monitor risks and recognize inflection points before they disappear. (p. 262)

I disagree with this perspective, but at least I know what his opinion is and where exactly we part ways. I can’t say the same for those championing the “let’s have a conversation” mentality.

Is a crypto-Luddite mentality indeed driving these perspectives? I can’t say for certain, but one thing is clear: these types of recommendations lack substance. And in the absence of substance, a policy recommendation becomes an empty vessel that can be filled with pretty much any idea under the sun. Any proposal that can mean all things to all people warrants skepticism, especially if it takes the form of a recommendation for policymakers.

So the next time you hear someone advocate a conversation as a solution to the concerns associated with a new technology, beware: it may simply be the hue and cry of a crypto-Luddite.